Skyvia test - Salesforce to database

28 Jun 2022

Whether I can trust it with corporate data is another question, but my first question about Skyvia, which promises to intelligently back up Salesforce orgs into enterprise relational database management systems, works as well as it says it should.

Is it really possible to let Skyvia “play DBA” and keep relational database table schemas automatically in sync with the schema changes Salesforce admins make?

To find out, I signed up for Skyvia’s free tier, signed up for a Salesforce dev org (which comes with data prepopulated in it), and provisioned a little empty PostgreSQL database in AWS RDS. I thought about doing Oracle or SQL Server, but this spun up the fastest and was within the limits of my AWS learner account.

I punched in credentials for both ends as “Connections” in Skyvia.

Then I set up a “Replication” (under “Packages”) in Skyvia from Salesforce to the database only doing the “Account” & “Contact” tables (there are almost 800 table types in a dev org – woah!) and clicked Run.

- My run stayed in “queued” state for quite a long time.

- About 5 minutes in, it finally flipped to “Running.”

- It had 33 “success rows” within about a minute after that, and within another minute or so, it had logged that it had completed the job.

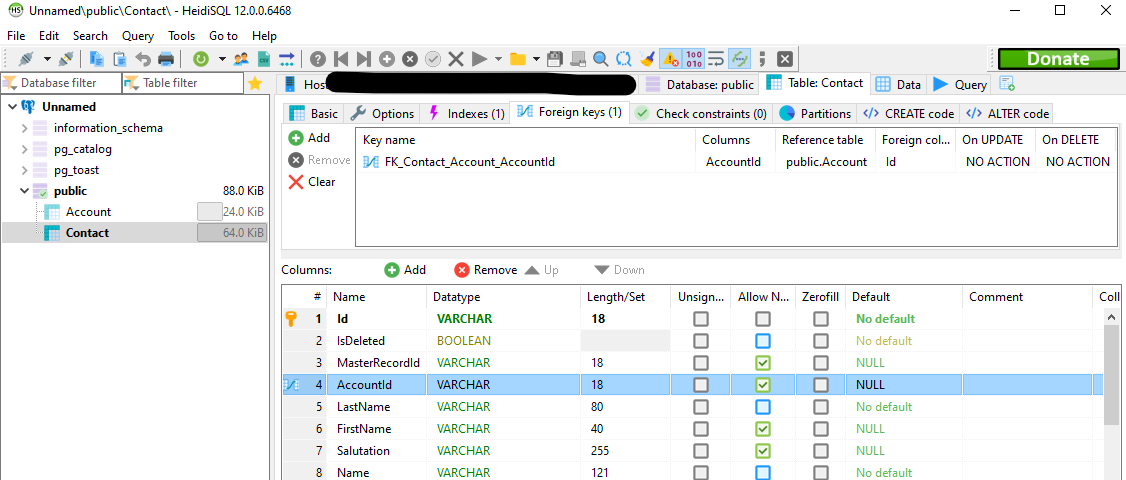

Once I saw that, I logged into my database with HeidiSQL and noted that in the public schema, there were now public.Account and public.Contact tables, with column names and data types that seemed perfectly reasonable (actually, public.Contact.AccountId was even set as a foreign key to public.Account.Id, as promsied by Skyvia).

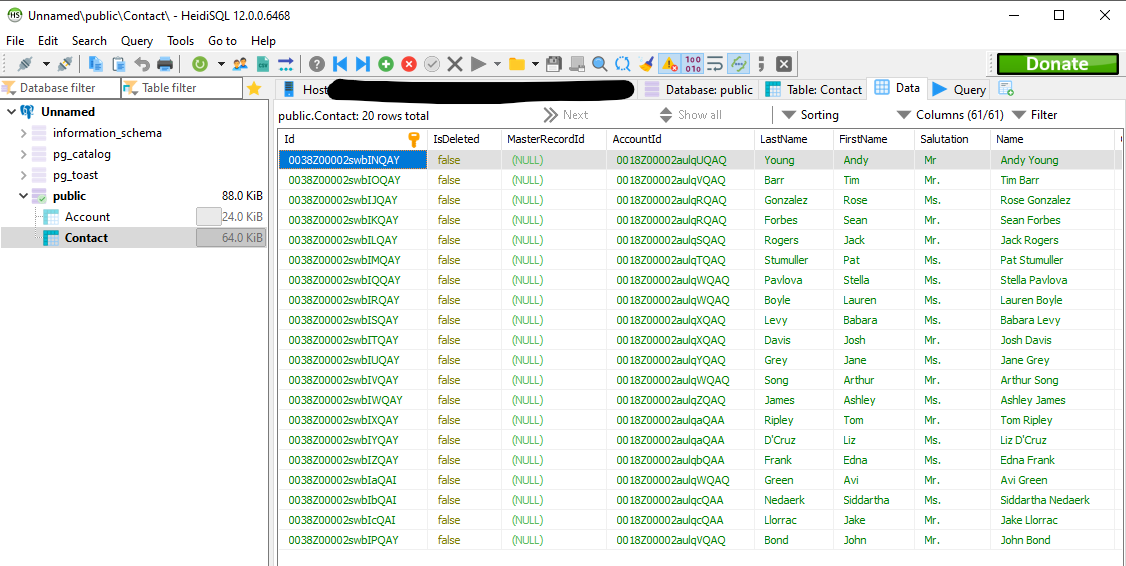

Data looked good in the tables.

Neat.

Skyvia’s head office is in the Czech Republic, with additional offices in Hong Kong, Slovakia, and Ukraine, so I have no idea if my company would go to all the work to security-audit them and potentially strike a deal with them, but that was pretty darned slick.

I went into the Skyvia “Replication” I’d just run and edited it to select all nearly 800 tables.

When I did, it asked me to choose an option and click Save from the following prompt:

The package schema was modified

- Save package and reset LastSyncTime Next time a full replication is performed, and, depending on package settings, tables are re-created.

- Save package without resetting LastSyncTime You may need to manually modify target database tables according to the package changes.

Oof – no way am I manually modifying target database table definitions. That’s Skyvia’s job! My team’s sick of maintaining Salesforce-clone database schemas.

I’m choosing the first option.

Question for my readers: is Skyvia alone in making it ridiculously easy to keep up with admins’ schema changes (you just go into their panel, un-check a box above the full-list of tables, re-check it, and choose “save package and reset”) and to schedule a backup where the database DDL is done by the backup tool instead of by in-house DBAs?

Or are there other companies offering this same service?

I know there are plenty of ETL tools out there that can connect to a database, but this thing where Skyvia does the DDL for you, not just the DML, is NIIIIIIIICE.

Who are all the players in that market?

Suggestions received so far:

- CodeyKyle thinks CData takes care of the database-side DDL.

- SuperSFDuder182 asked a similar question on the Salesforce reddit in mid-2021.

Update: my 800-table run failed w/ the following error message:

“The source object ‘AccountShare’ has neither ‘CreatedDate’ nor ‘SystemModstamp’ column and cannot be used when the ‘Incremental Updates’ check box is selected.”

LOL, rude!

OK, so there’s a bit to learn, and maybe “keeping up with the Salesforce admins” won’t be quite as simple as just un-checking and re-checking an all-tables box, but … on the other hand, this user interface is so slick, why would a DBA need to get involved, other than during punching a password into the Connections box, maybe? Otherwise, admins who actually know their Salesforce tables can just be in charge of deciding what to sync and which clicky-boxes in Skyvia deliver the best results to the database tables Skyvia creates.

PostgreSQL note:

Setting up a database server in AWS took all day to figure out.

I had to do a “standard” RDS create, not “easy,” because I had to tell AWS to create brand new security groups, VPCs, subnets, etc. for my new database. (Also, I had to remember to set it to public access.)

Finally got AWS set up so it would let Skyvia connect to it from its 3 possible IP addresses of 52.190.252.0, 40.118.246.204, & 13.86.253.112. (Okay, actually who am I kidding … I changed my own IP address as found in the VPC inbound rules that AWS set up for me to to 0.0.0.0/0 or something like that since my enter learning sandbox was going to be gone within hours anyway. Was my potential participation in a botnet really going to bring down the internet right then?)

Obviously, this is not the right way to set up a long-lived database, or a database with sensitive data in it.

Update: I figured out Pulumi YAML to provision a PostgreSQL database in AWS with proper networking (thanks for the education about VPCs, subnets, & Security Groups, Andy Shinn):

resources:

the-default-vpc:

type: aws:ec2:DefaultVpc

my-database-sec-grp:

type: aws:ec2:SecurityGroup

properties:

name: pulu-database-sec-grp

description: "I made this security group in Pulumi for my RDS instance"

ingress:

- cidrBlocks: # Don't forget to add a desktop IP address, too, if you want to test locally

- 52.190.252.0/32 # Skyvia IP address 1 of 3

- 40.118.246.204/32 # Skyvia IP address 2 of 3

- 13.86.253.112/32 # Skyvia IP address 3 of 3

fromPort: 5432

protocol: "tcp"

toPort: 5432

vpcId: ${the-default-vpc.id}

my-postgres-rds:

type: aws:rds:Instance

properties:

allocatedStorage: 20

availabilityZone: us-east-1a

backupRetentionPeriod: 7

backupWindow: 03:23-03:53

caCertIdentifier: rds-ca-2019

copyTagsToSnapshot: true

dbName: "a-cool-database-name"

engine: postgres

engineVersion: 13.4

identifier: pulu-rds-pgrs

instanceClass: db.t3.micro

licenseModel: postgresql-license

maintenanceWindow: sun:09:38-sun:10:08

maxAllocatedStorage: 1000

optionGroupName: default:postgres-13

parameterGroupName: default.postgres13

port: 5432

password: "a-great-database-admin-password"

publiclyAccessible: true

skipFinalSnapshot: true

username: "a-great-database-admin-username"

vpcSecurityGroupIds:

- ${my-database-sec-grp.id}

outputs:

# Export some details about the database to system output when running "pulumi up"

databaseAddress: ${my-postgres-rds.address}

databaseEndpoint: ${my-postgres-rds.endpoint}

defaultVpcId: ${the-default-vpc.id}

mySgId: ${my-database-sec-grp.id}